Have you ever run a container that exits successfully (exit code 0) but reports an OutOfMemory error at the same time, leaving you confused and questioning your life decisions?

Let me explain the how and why, starting with the OutOfMemory Killer.

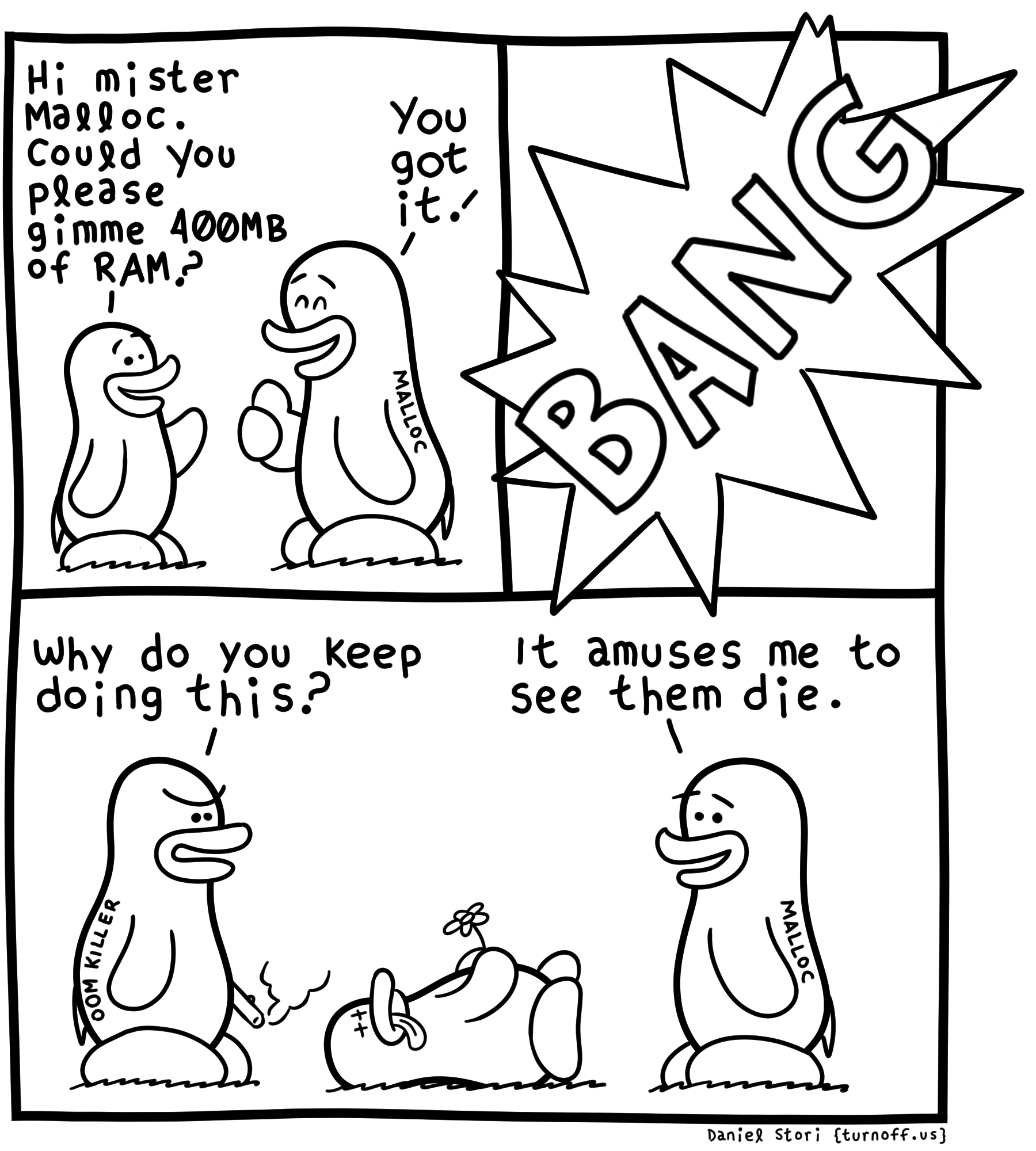

OutOfMemory Killer ☠️

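Also known as the OOM Killer, this is a mechanism built into the Linux kernel whose main purpose is to handle situations where the system is critically low on memory (physical or swap). It is also invoked when the processes in a memory cgroup exceed that cgroup's limit, which is exactly what happens inside a container started with a hard memory limit such as `--memory 1G`.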

It verifies that the system is truly out of memory, then selects a process and kills it to free up memory. The selection is based on each process's OOM score.
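When the OOM Killer acts, the kernel writes a line to its log. The bash sketch below filters those lines; it assumes the message contains `Killed process <PID> (<command>)`, which is how recent kernels phrase it, and `oom_kills` is a hypothetical helper name, not an existing tool. Reading the kernel log with `dmesg` usually requires root.

```shell
#!/usr/bin/env bash
# oom_kills (hypothetical helper): read kernel log lines on stdin and print
# "PID COMMAND" for every OOM kill found.
# Assumes messages of the form "... Killed process 172 (stress) ...".
oom_kills() {
  sed -n 's/.*Killed process \([0-9][0-9]*\) (\([^)]*\)).*/\1 \2/p'
}

# Usage on a real host (reading the kernel log usually needs root):
#   sudo dmesg | oom_kills
```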

What is an OOM score?

Every running process in Linux has an oom_score. The kernel calculates it mainly from how much memory the process is using, then adjusts it by the process's oom_score_adj value, which ranges from -1000 (never kill this process) to 1000. The process with the highest oom_score is killed first by the OOM Killer when the system runs critically low on memory.

You can check the oom_score of a running process using its process ID:

```shell
cat /proc/PROCESS_ID/oom_score
```
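To see which processes the OOM Killer would pick first, you can read that file for every PID. A minimal bash sketch, assuming a Linux `/proc` filesystem; `top_oom_scores` is a hypothetical helper name, and it also prints each process's `oom_score_adj` so you can spot processes that have been biased up or down.

```shell
#!/usr/bin/env bash
# top_oom_scores (hypothetical helper): print the N processes with the highest
# oom_score as "SCORE PID OOM_SCORE_ADJ COMMAND" (default N=5).
top_oom_scores() {
  local n="${1:-5}" dir score adj comm
  for dir in /proc/[0-9]*; do
    # A process may exit while we loop, so skip any entry we cannot read.
    score=$(cat "$dir/oom_score" 2>/dev/null) || continue
    adj=$(cat "$dir/oom_score_adj" 2>/dev/null) || continue
    comm=$(cat "$dir/comm" 2>/dev/null) || continue
    printf '%s %s %s %s\n' "$score" "${dir#/proc/}" "$adj" "$comm"
  done | sort -k1,1nr | head -n "$n"
}

top_oom_scores 5
```

Run inside a container, this only sees the container's own processes, which makes it a quick way to check which of them is most exposed.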

Okay then, why did I get exit code 0 and OutOfMemory at the same time?

This happens when a “leaf” process, one that is not the main process defined by your Docker image’s ENTRYPOINT or CMD, attempts to use more memory than is available. Depending on your application, the main process may spawn other processes to perform certain tasks, and if one of them attempts to use more memory than the container is allowed, the OOM Killer springs into action and kills that process.

Since the leaf process was not your container's main process, the main process keeps running: it can finish its job and exit normally, or run indefinitely. The container's exit code only reflects how the main process exited, but the Docker runtime still takes note of the OOM event, even though it wasn't for the main process. Let’s see this whole thing in action.
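The parent/child behaviour can be simulated without Docker. The bash sketch below is a hypothetical stand-in, not the NGINX setup: `main_process` plays the container's main process, a background `sleep` plays the leaf process, and sending it SIGKILL plays the OOM Killer, which also kills with SIGKILL. The child dies, yet the main process carries on and returns 0.

```shell
#!/usr/bin/env bash
# main_process (hypothetical): stands in for the container's main process.
main_process() {
  local child status=0
  sleep 30 &                 # stand-in for a memory-hungry leaf process
  child=$!
  kill -KILL "$child"        # stand-in for the OOM Killer's SIGKILL
  wait "$child" || status=$?
  # bash reports a death by signal as 128 + signal number: 128 + 9 = 137
  echo "leaf process exited with status $status"
  echo "main process carries on and exits cleanly"
  return 0
}

main_process
echo "main process exit code: $?"
```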

Locally replicate the exit code 0 and OOM combo…

To replicate this combination locally, you’ll need to have Docker running (obviously) and the jq tool installed.

- Run a simple NGINX container with a hard memory limit of 1GB.

```shell
docker run -d -p 8000:80 --memory 1G nginx:latest
```

- Exec into the running container:

```shell
docker exec -it CONTAINER_ID /bin/bash
```

- Inside the container, install the stress package:

```shell
apt update && apt install -y stress
```

- Run stress with the following arguments:

```shell
stress -c 2 -m 4 --vm-bytes 512M
```

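- This starts 2 CPU workers and 4 memory workers that each allocate 512MB, 2GB in total, which is well over the container's 1GB memory limit. You should see output like the one below. Notice the second line: a worker got signal 9 (SIGKILL), which is the signal the OOM Killer uses.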

```
stress: info: [168] dispatching hogs: 2 cpu, 0 io, 4 vm, 0 hdd
stress: FAIL: [168] (425) <-- worker 172 got signal 9
stress: WARN: [168] (427) now reaping child worker processes
stress: FAIL: [168] (461) failed run completed in 1s
```

- From another terminal, stop the container with `docker stop`, which gracefully terminates the main process in the container:

```shell
docker stop CONTAINER_ID
```

- Check the container details using docker inspect:

```shell
docker inspect CONTAINER_ID | jq ".[0].State"
```

- You should see output similar to the following:

```json
{
  "Status": "exited",
  "Running": false,
  "Paused": false,
  "Restarting": false,
  "OOMKilled": true,
  "Dead": false,
  "Pid": 0,
  "ExitCode": 0,
  "Error": "",
  "StartedAt": "2024-09-27T08:18:42.210404427Z",
  "FinishedAt": "2024-09-27T08:27:21.077232458Z"
}
```
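If you would rather run the steps above in one go, the bash sketch below chains them together non-interactively. It is a convenience built on assumptions, not part of the original walkthrough: `reproduce_oom_exit0` is a hypothetical name, it drops the port mapping (not needed here), uses `apt-get` instead of `apt` (which warns when scripted), adds a `--timeout` so stress cannot hang, and prints the two interesting fields with `docker inspect --format` instead of `jq`. Whether the stress workers actually get OOM-killed can depend on your host, for example on how much swap it has.

```shell
#!/usr/bin/env bash
# reproduce_oom_exit0 (hypothetical helper): run the walkthrough end to end.
# Requires Docker and network access to pull nginx:latest and the stress package.
reproduce_oom_exit0() {
  local cid
  # NGINX with a 1GB hard memory limit, as in the first step.
  cid=$(docker run -d --memory 1G nginx:latest) || return 1

  # Install stress and run it; stress exits non-zero when a worker is
  # killed, so its status is ignored here.
  docker exec "$cid" sh -c \
    'apt-get update -qq && apt-get install -y -qq stress >/dev/null &&
     stress -c 2 -m 4 --vm-bytes 512M --timeout 30s' || true

  # Gracefully stop NGINX, the container's main process.
  docker stop "$cid" >/dev/null

  # Print the OOMKilled flag and exit code of the stopped container.
  docker inspect --format 'OOMKilled={{.State.OOMKilled}} ExitCode={{.State.ExitCode}}' "$cid"
}

# Usage (on a setup like the one above, this should report
# OOMKilled=true ExitCode=0):
#   reproduce_oom_exit0
```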

Note that OOMKilled is true and ExitCode is 0. Since stress was not the main process in the container, its OOM kill did not affect NGINX, which kept running until `docker stop` shut it down gracefully with exit code 0.
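Outside of a demo, reading the two fields together tells you which process the OOM Killer most likely hit. The bash sketch below is a hypothetical helper encoding that reasoning; it relies on the convention that a process killed by a signal exits with 128 + the signal number, so a main process killed with SIGKILL (9) shows exit code 137. The messages are heuristics, since an application could also exit with 137 on its own.

```shell
#!/usr/bin/env bash
# explain_exit (hypothetical helper): interpret a container's OOMKilled flag and
# exit code, e.g. the values printed by
#   docker inspect --format '{{.State.OOMKilled}} {{.State.ExitCode}}' CONTAINER_ID
explain_exit() {
  local oom_killed="$1" exit_code="$2"
  if [ "$oom_killed" = "true" ] && [ "$exit_code" -eq 137 ]; then
    echo "the main process was most likely killed by the OOM Killer"
  elif [ "$oom_killed" = "true" ]; then
    echo "a leaf process was OOM-killed; the main process exited with code $exit_code"
  elif [ "$exit_code" -eq 137 ]; then
    echo "the main process got SIGKILL, most likely not from the OOM Killer (e.g. docker kill)"
  else
    echo "no OOM event recorded; the main process exited with code $exit_code"
  fi
}

explain_exit true 0
```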

References: